NPU RGB-D Dataset

1. Large-scale RGB-D Dataset

To evaluate how well RGB-D SLAM algorithms handle large-scale sequences, we recorded this dataset, which contains several sequences captured on the campus of Northwestern Polytechnical University with a Kinect for Xbox 360. The dataset is challenging because it contains fast motion, rolling-shutter effects, repetitive scenes, and even poor depth information.

Each sequence consists of thousands of color and depth image pairs, which have been aligned with OpenNI and organized in the format used by the TUM dataset. We provide reconstruction results from SDTAM (Semi-direct Tracking and Mapping, an RGB-D localization and mapping algorithm) and sample movies so that users can preview the sequences before downloading.
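Because the color and depth images are stored as separate files, a loader has to pair them by timestamp. The sketch below assumes that each sequence ships index files in the TUM style, that is, text files such as rgb.txt and depth.txt whose lines read "timestamp filename" with "#" comment lines. The function names and the 0.02 s tolerance are illustrative choices, not part of the dataset.

```python
def read_file_list(text):
    """Parse 'timestamp filename' lines, skipping comments and blank lines."""
    entries = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        stamp, name = line.split()[:2]
        entries[float(stamp)] = name
    return entries


def associate(rgb, depth, max_diff=0.02):
    """Greedily pair each color stamp with the closest unused depth stamp.

    max_diff is an assumed tolerance in seconds. The candidate list is
    quadratic in the number of frames, which is fine for a sketch.
    """
    candidates = sorted(
        (abs(r - d), r, d) for r in rgb for d in depth if abs(r - d) < max_diff
    )
    used_rgb, used_depth, pairs = set(), set(), []
    for _, r, d in candidates:
        if r not in used_rgb and d not in used_depth:
            used_rgb.add(r)
            used_depth.add(d)
            pairs.append((r, rgb[r], d, depth[d]))
    return sorted(pairs)
```

In practice the two index files would be read from the extracted sequence directory and passed to read_file_list as strings; frames without a close enough partner are simply dropped.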

2. Sequences

Each sequence can be downloaded either directly from this website (which may be slow) or from Baidu (which should be much faster in China).

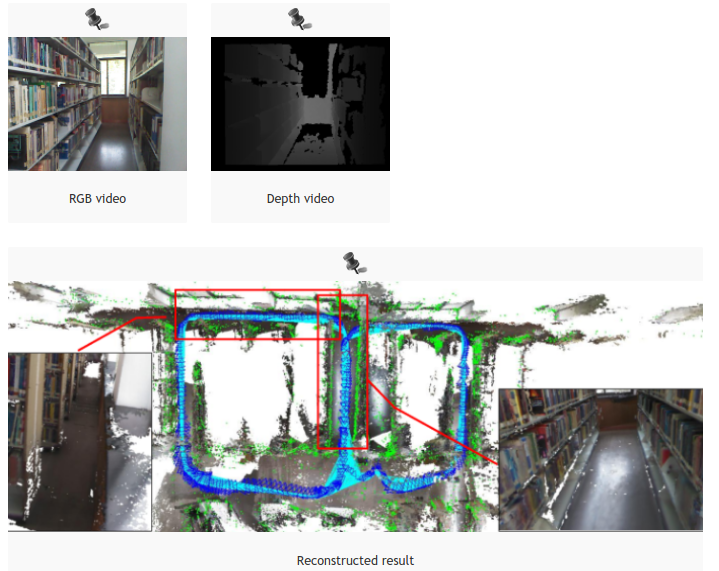

2.1. ShelvesSmall

This sequence contains two loops with some repetitive scenes, and its trajectory is about 40 meters long. Download the sequence as a .tgz file from here or from Baidu Cloud (file size: approx. 1.35 GB).

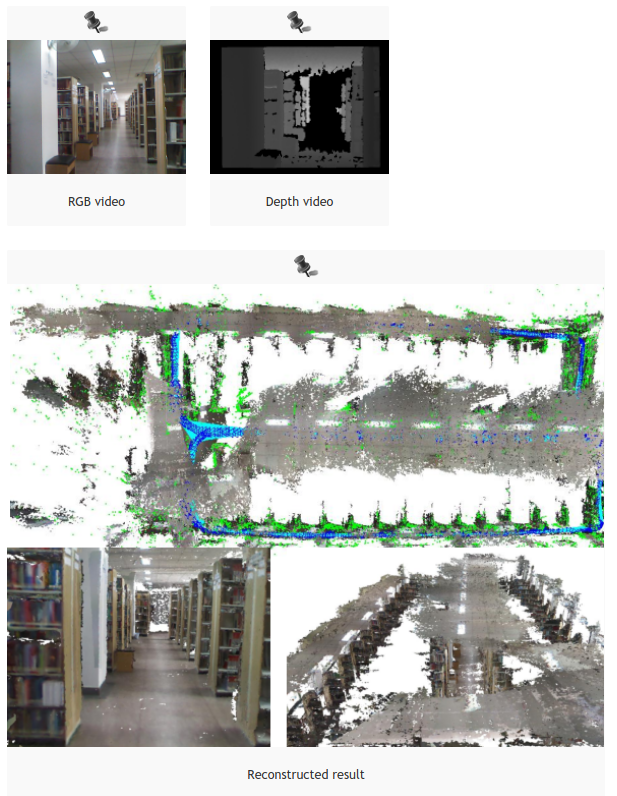

2.2. Shelves

This sequence was recorded in a large library room with hundreds of shelves, so the scene is highly repetitive. The trajectory is about 100 meters long and contains two loops. Download the sequence as a .tgz file from here or from Baidu Cloud (file size: approx. 2.58 GB).

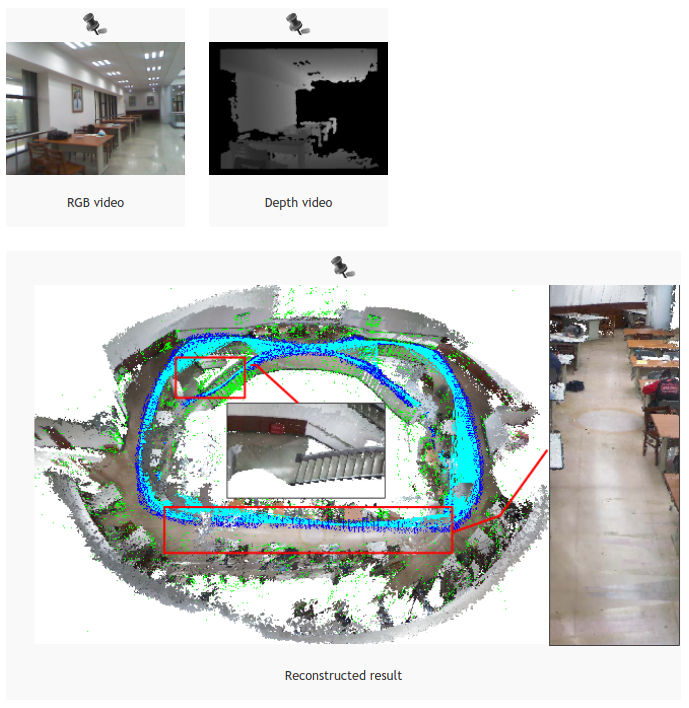

2.3. LibraryFloors

This sequence covers two floors of the library hall, with a trajectory more than 100 meters long. Download the sequence as a .tgz file from here or from Baidu Cloud (file size: approx. 3.91 GB).

3. Intrinsic Parameters

The Kinect has a factory calibration stored onboard, based on a high-order polynomial warping function. The OpenNI driver uses this calibration to undistort the images and to register the depth images (taken by the IR camera) to the RGB images. Therefore, the depth images in our dataset are reprojected into the frame of the color camera, which means that there is a 1:1 correspondence between pixels in the depth map and the color image.

The conversion from the 2D images to 3D point clouds works as follows. Note that the focal lengths (fx/fy), the optical center (cx/cy), the distortion parameters (d0-d4) and the depth correction factor are different for each camera. The Python code below illustrates how the 3D point can be computed from the pixel coordinates and the depth value:

    fx = 525.0  # focal length x
    fy = 525.0  # focal length y
    cx = 319.5  # optical center x
    cy = 239.5  # optical center y

    factor = 5000  # for the 16-bit PNG files

    for v in range(depth_image.height):
        for u in range(depth_image.width):
            Z = depth_image[v, u] / factor
            X = (u - cx) * Z / fx
            Y = (v - cy) * Z / fy

Note that the script above uses the default (uncalibrated) intrinsic parameters. To further improve accuracy, we calibrated the color camera with both the ATAN model (used by PTAM) and the OpenCV model.
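The per-pixel loop can also be written in vectorized form, which is usually much faster for full 640x480 frames. The following is a minimal sketch using NumPy with the same default intrinsics. The function name depth_to_points is hypothetical, and the code assumes, as in the TUM format, that a raw depth value of 0 marks a missing measurement.

```python
import numpy as np


def depth_to_points(depth_raw, fx=525.0, fy=525.0, cx=319.5, cy=239.5,
                    factor=5000.0):
    """Back-project a raw 16-bit depth image to an (N, 3) array of points."""
    depth_raw = np.asarray(depth_raw)
    height, width = depth_raw.shape
    # u holds the column index and v the row index of every pixel.
    u, v = np.meshgrid(np.arange(width), np.arange(height))
    z = depth_raw.astype(np.float64) / factor
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1)
    # Assumption: raw value 0 means "no depth", so those pixels are dropped.
    return points[z > 0]
```

The depth image itself can be loaded with any library that preserves 16-bit PNG values; the calibrated parameters can be passed in place of the defaults.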

The parameter file can be downloaded here. We highly recommend loading the parameters with EICAM; more details are given in EICAM: An Efficient C++ Implementation of Different Camera Models.
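For readers unfamiliar with the ATAN model, the sketch below illustrates the radial mapping it is generally understood to use (the field-of-view model, with a single distortion parameter w). This is not EICAM's API, and the function names are hypothetical. All intrinsics are expected in pixel units; the actual values should be taken from the parameter file.

```python
import math


def atan_project(x, y, fx, fy, cx, cy, w):
    """Map normalized coordinates (x = X/Z, y = Y/Z) to a distorted pixel."""
    r = math.hypot(x, y)
    if w == 0.0:
        scale = 1.0  # no distortion: plain pinhole projection
    elif r < 1e-8:
        scale = 2.0 * math.tan(w / 2.0) / w  # limit of the ratio as r -> 0
    else:
        scale = math.atan(2.0 * r * math.tan(w / 2.0)) / (w * r)
    return cx + fx * scale * x, cy + fy * scale * y


def atan_unproject(u, v, fx, fy, cx, cy, w):
    """Map a distorted pixel back to undistorted normalized coordinates."""
    xd = (u - cx) / fx
    yd = (v - cy) / fy
    rd = math.hypot(xd, yd)
    if w == 0.0:
        scale = 1.0
    elif rd < 1e-8:
        scale = w / (2.0 * math.tan(w / 2.0))  # limit of the ratio as rd -> 0
    else:
        scale = math.tan(rd * w) / (2.0 * math.tan(w / 2.0) * rd)
    return xd * scale, yd * scale
```

With the undistorted normalized coordinates (x, y) from atan_unproject, a 3D point follows as X = x * Z and Y = y * Z for a depth Z.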